November 17, 2021

Easy Tips to Fix The Common Indexing Errors with Google Search Console

It’s no secret that Google is the most popular search engine in the world. As a result, it is very important for any company that has an online presence to have its website indexed by Google. And yet, many companies are not aware of the common errors they may be making with their site which can lead to Google not indexing them at all. This blog post will outline some easy tips you can follow so your website is listed on Google and ranked higher than competitors!

Start With The Basics

To start with, make sure your website content hasn’t been blocked due to robots.txt or meta tags. If this does happen, contact your webmaster immediately as they will need to edit these files on your server first before the problem can be fixed.

Once you’ve sorted this out, make sure your site’s pages are linked to correctly from other sites. Your content shouldn’t only be reachable from your own website but also from review sites, social media platforms and blog posts. Don’t stop at a single link, though! Links from several relevant sources help Google discover and index your content, and can improve its position on the SERP (Search Engine Results Page).

Google Ranking Algorithm

Now, onto the more advanced stuff! Two of Google’s best-known ranking algorithm updates are Panda and Penguin. Panda, first released in 2011, demotes websites with duplicate or poor-quality content, while Penguin, released in 2012, targets manipulative link building. If you want to have a higher ranking with Google, you need to have great content that people will enjoy. In turn, this will lead to more backlinks and build your online presence as a brand! Google’s Rater Guidelines give webmasters insight into what the search engine considers important in a page.

Google Indexing Errors

Now that you know what Google wants to see in a website, it’s time to look at common indexing errors which webmasters make.

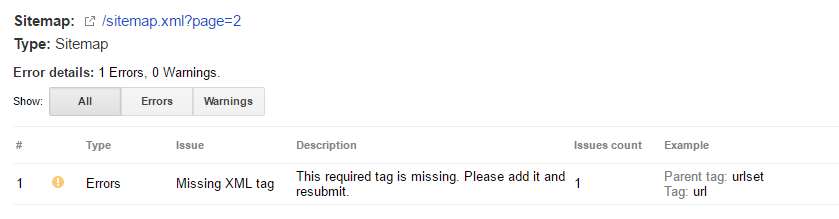

Missing Sitemap

A missing sitemap is one of the most common reasons content goes unindexed, because Google may never discover pages that nothing else links to. To avoid this, make sure you have a sitemap on your website and submit it in Search Console.
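As a rough illustration of what a sitemap contains, here is a minimal sketch that builds one with Python’s standard library. The page URLs and the `build_sitemap` helper are placeholders invented for this example; most CMS platforms and SEO plugins generate the file for you.

```python
from xml.etree import ElementTree as ET

# Namespace defined by the sitemaps.org protocol.
SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"

def build_sitemap(urls):
    """Return a sitemap XML string with one <url><loc> entry per URL."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for url in urls:
        entry = ET.SubElement(urlset, "url")
        ET.SubElement(entry, "loc").text = url
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body

# Hypothetical pages on a placeholder domain.
pages = ["https://www.example.com/", "https://www.example.com/blog/"]
sitemap_xml = build_sitemap(pages)
print(sitemap_xml)
```

Save the output as sitemap.xml in your site’s root folder, then submit its URL in Search Console.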

Broken Links

When a link is broken, Google can’t reach the page it points to, which means that page’s content won’t be indexed through that link. Broken links can have a huge impact on your website, so fix them as soon as you find them. You should also check the backlinks pointing to any page that has moved or been removed, because links to a missing page are wasted.
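If you’d like to check a page for broken links yourself, a sketch along these lines may help. It collects every link on a page and reports those whose status code signals an error. The sample page and the canned status codes are made up so the example runs offline; in practice `get_status` would wrap whichever HTTP client you use.

```python
from html.parser import HTMLParser

class LinkCollector(HTMLParser):
    """Collect the href value of every <a> tag in an HTML document."""

    def __init__(self):
        super().__init__()
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            href = dict(attrs).get("href")
            if href:
                self.links.append(href)

def find_broken_links(html, get_status):
    """Return the links whose HTTP status is 400 or higher.

    get_status is any callable that maps a URL to a status code.
    """
    collector = LinkCollector()
    collector.feed(html)
    return [url for url in collector.links if get_status(url) >= 400]

# Hypothetical page and canned statuses (assumptions for illustration).
page = (
    '<a href="https://example.com/ok">ok</a> '
    '<a href="https://example.com/gone">gone</a>'
)
statuses = {"https://example.com/ok": 200, "https://example.com/gone": 404}
broken = find_broken_links(page, statuses.get)
print(broken)
```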

Duplicate Content

If your content shows up on other websites without proper attribution, that’s a red flag for the search engine, and it may index the copy instead of your original. If you see your content syndicated on another site that claims it as original, contact the webmaster and ask them to credit your page, for example with a canonical link that points back to it.

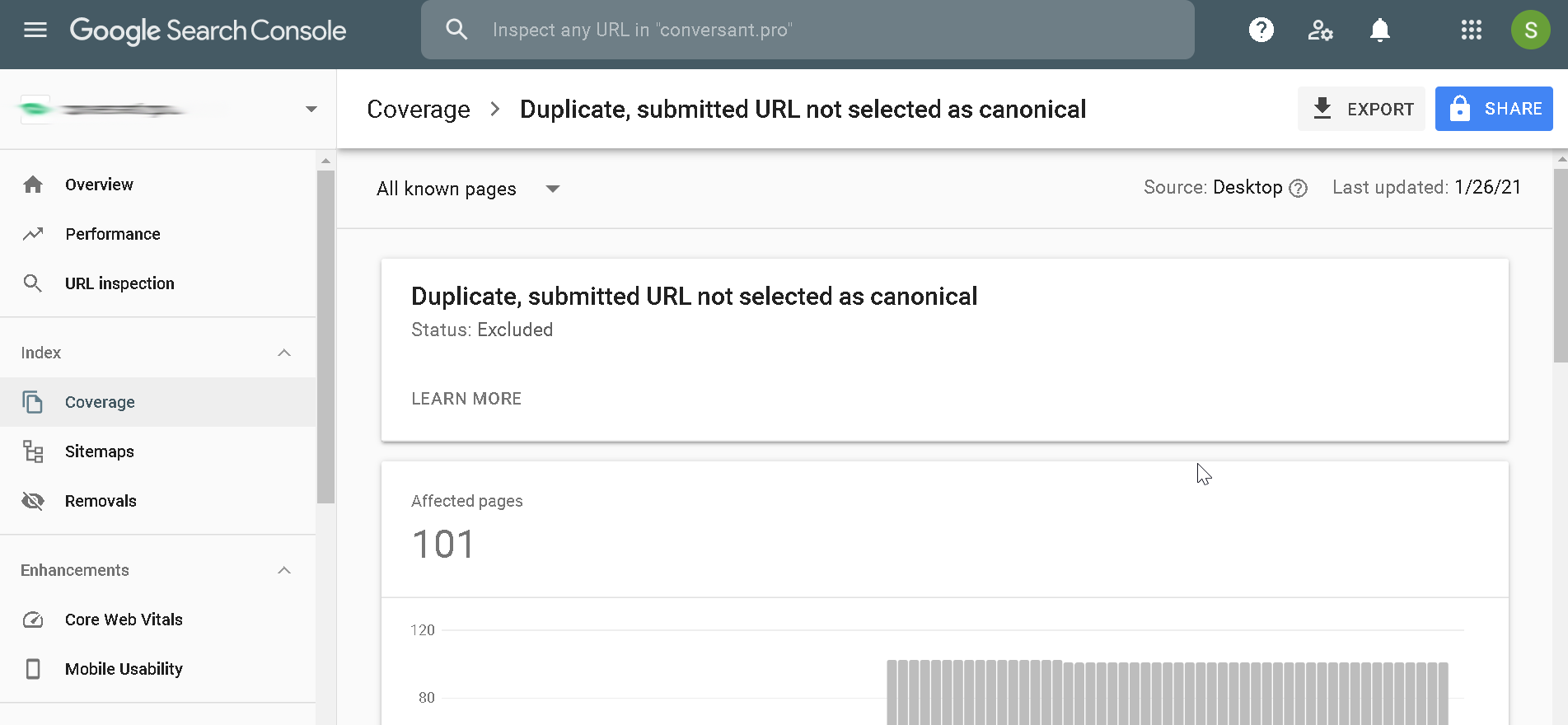

How To Find Indexing Errors With the Webmaster Coverage Report

The Index Coverage report shows which pages on your site Google has successfully indexed and which it could not. To check whether your site has indexing errors, follow these steps:

1) Log into your Google account in your web browser

2) Open Search Console

3) Click “Index > Coverage”

4) From here, click the “Error” tab. Ideally this screen will report that no errors were found.

If you are still unsure whether your website has been indexed by Google, go back to the Coverage report and look at the ‘Valid’ tab. Its count shows how many pages of your site have actually been indexed by Google. For a single page, the URL Inspection tool in Search Console reports that page’s index status.

How To Fix Indexing Errors

Once you’ve found the issue, it’s time to fix it! Follow these steps:

1) Save your content locally (on your computer) and compare it to the live version on your website. Search engines only read the live version, so make sure the two are identical.

2) If there is no difference, check the file name on the website itself; a misspelling might have been made there.

3) If you’re still having trouble, upload a different version of the file to your site and see if that works.

Please note: When dealing with indexing errors, it’s important to remember that patience is key! You need to try every solution possible before giving up because Google takes its time with everything it does.

If you’re still having trouble after making the necessary changes, try to contact Google directly through Search Console. You can either go through the ‘Help’ tab or search their knowledge base for specific instructions on your case. There is also a detailed guide on indexing errors here

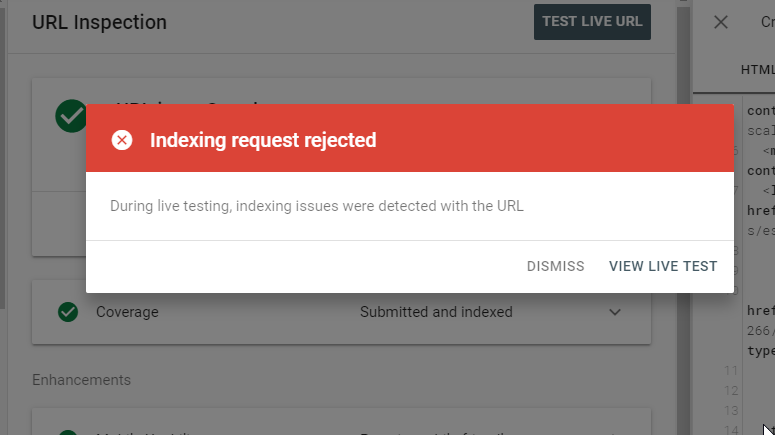

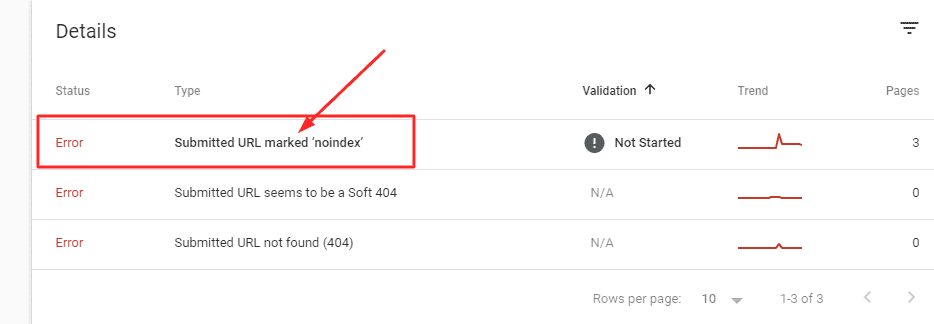

Submitted URL marked ‘noindex’

Search engines will not list a page if it has been tagged ‘noindex’. This error appears when a URL you submitted for indexing, for example through your sitemap, carries that tag, so Google receives two contradictory instructions for the same page.

There are two ways that pages can be blocked with ‘noindex’. The first way is by including a robots meta tag in the page’s HTML, and the second is by sending an ‘X-Robots-Tag: noindex’ header in the HTTP response.

I’ll show how to diagnose this problem in general terms below for quick reference:

If you’re sure something else isn’t causing these errors (a server error?), decide whether the page should be indexed. If it should, remove the ‘noindex’ tag or header. If indexing doesn’t matter for that page, leave the tag alone and remove the URL from your sitemap instead!
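To check a page for either ‘noindex’ mechanism, a sketch like the following could be a starting point. It looks for a robots meta tag in the HTML and for an `X-Robots-Tag` response header. The `is_noindex` helper and the sample inputs are invented for this example; you would pass in the HTML and headers you fetched from your own page.

```python
from html.parser import HTMLParser

class RobotsMetaParser(HTMLParser):
    """Collect the directives from <meta name="robots"> style tags."""

    def __init__(self):
        super().__init__()
        self.directives = []

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        name = (attrs.get("name") or "").lower()
        if tag == "meta" and name in ("robots", "googlebot"):
            content = attrs.get("content") or ""
            self.directives += [d.strip().lower() for d in content.split(",")]

def is_noindex(html, headers):
    """Return True if the meta tag or the X-Robots-Tag header says noindex."""
    parser = RobotsMetaParser()
    parser.feed(html)
    header_value = ""
    for name, value in headers.items():
        if name.lower() == "x-robots-tag":
            header_value = value.lower()
    return "noindex" in parser.directives or "noindex" in header_value

# Hypothetical inputs for illustration.
print(is_noindex('<meta name="robots" content="noindex, follow">', {}))
print(is_noindex("<p>Hello</p>", {"X-Robots-Tag": "noindex"}))
print(is_noindex('<meta name="robots" content="index, follow">', {}))
```

The first two calls report a blocked page and the third does not.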

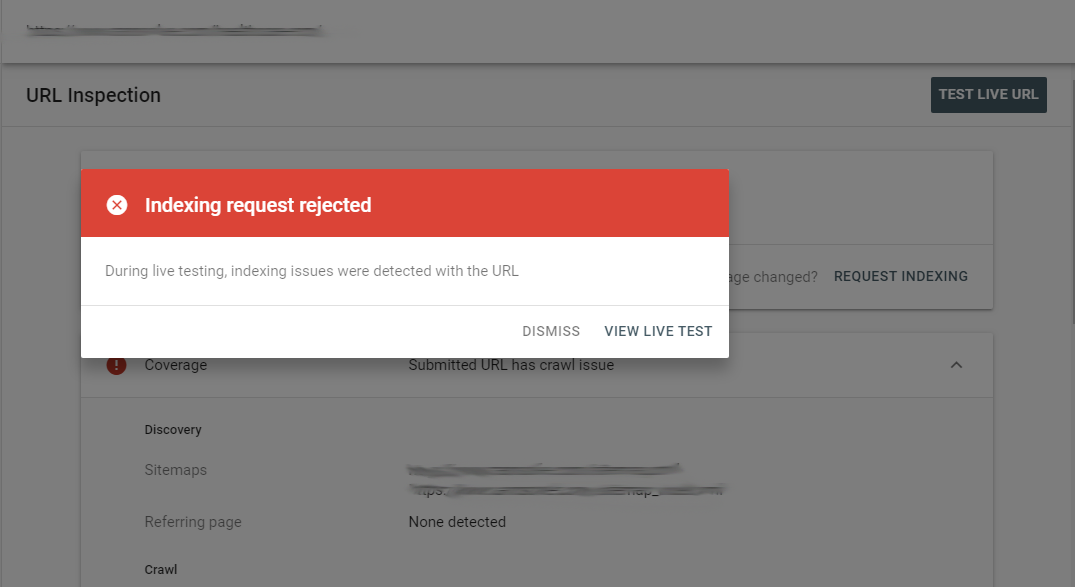

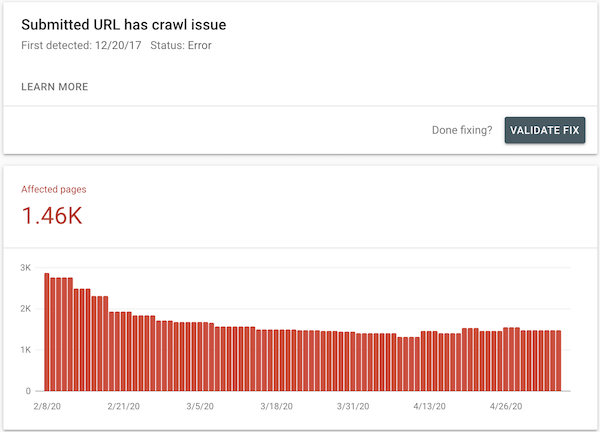

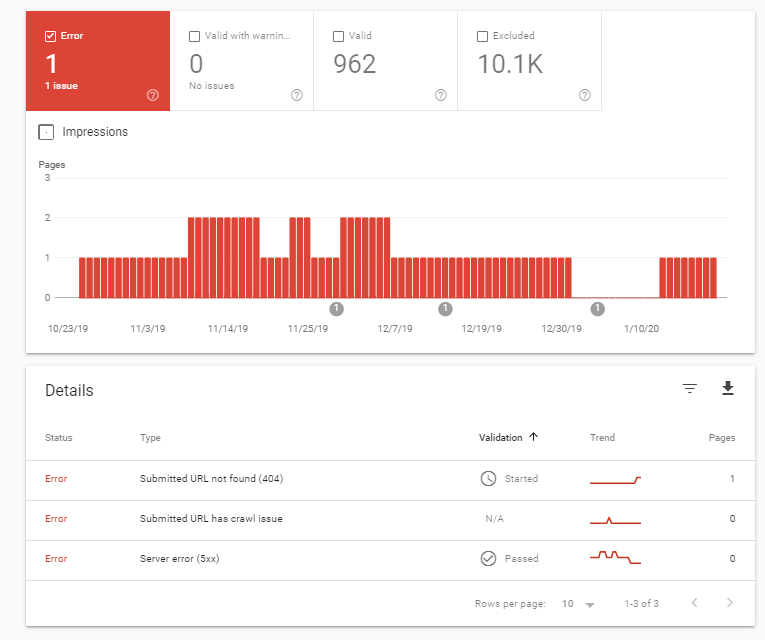

Submitted URL has crawl issue

The crawler will see your entire website as a collection of links and they need to be able to follow those links without errors.

According to Google’s page, the most common crawl error is when “URLs are blocked by robots.txt”.

There are a variety of reasons why you might see this error. It could be due to “noindex” or other meta tags on your website, to page loading errors during the crawl (such as images not loading), or to something else entirely that stops Googlebot from reaching the page. You can use Search Console’s URL Inspection feature to narrow down the exact cause so it doesn’t happen again.

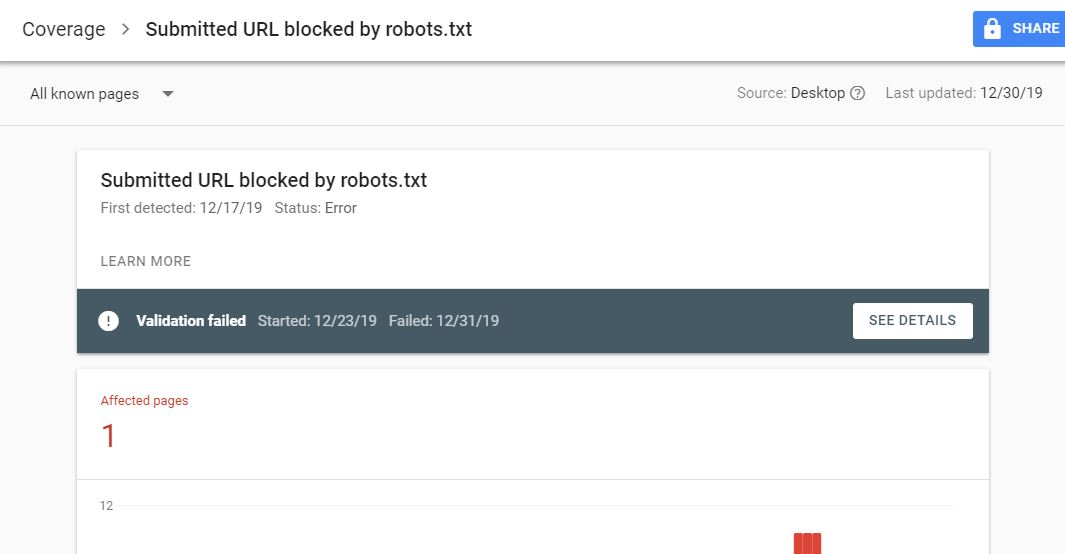

Submitted URL blocked by robots.txt

This error means that a URL you submitted for indexing is blocked by a rule in your ‘robots.txt’ file, so Googlebot is not allowed to crawl it. So how about we go ahead and fix that?

To resolve this issue, remove the rule that blocks the affected URLs from the robots.txt file on your website.

When you want certain pages of your website to stay out of the crawl, listing them in robots.txt tells crawlers to keep away. If a blocked page should remain blocked, remove it from your sitemap instead of editing robots.txt. Either way, check which URLs a rule covers before deleting anything!
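Before you edit robots.txt, you can check which URLs a rule actually blocks. The sketch below uses Python’s built-in `urllib.robotparser` module. The rules and URLs are placeholders, and Google’s own parser may read unusual rules slightly differently, so treat the result as a first check only.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content (an assumption for illustration).
robots_txt = """\
User-agent: *
Disallow: /private/
"""

parser = RobotFileParser()
parser.parse(robots_txt.splitlines())

def is_blocked(url, user_agent="Googlebot"):
    """Return True if the parsed rules forbid this user agent from the URL."""
    return not parser.can_fetch(user_agent, url)

print(is_blocked("https://www.example.com/private/report.html"))
print(is_blocked("https://www.example.com/blog/"))
```

The first URL falls under the ‘Disallow’ rule and is reported as blocked; the second is not.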

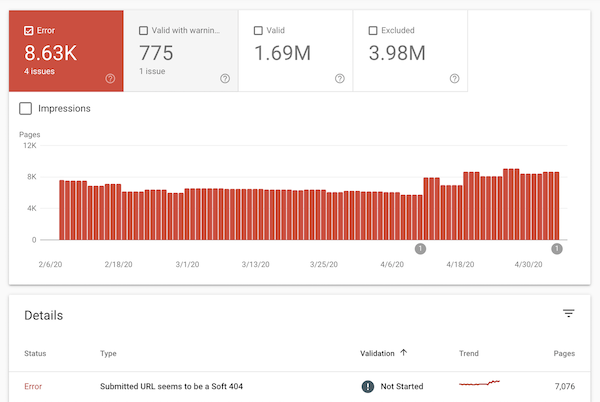

Submitted URL seems to be a soft 404

If you follow a link on your website and the page ‘disappears’ or shows a ‘not found’ message while the server still reports success, you may be looking at a soft 404. Google treats a soft 404 as an error because the crawler expects a page that loads successfully to have some content, but finds none.

If the affected pages really are gone, configure your server to return a ‘hard’ 404 status for them. If they are missing because of a broken link or reference, then that should be fixed ASAP! However, if the pages exist and simply have very little content, add useful content so they are no longer mistaken for error pages. You can use Google Search Console (formerly Webmaster Tools) for more information on this, as well as on any of the other errors listed here.
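To get a feel for what a soft 404 looks like, here is a rough heuristic: a page that answers with status 200 but has almost no text, or reads like an error page. This is only an illustrative guess, not how Google actually detects soft 404s. The phrases and the length threshold are arbitrary assumptions.

```python
# Phrases that often appear on error pages (assumed list, adjust as needed).
NOT_FOUND_PHRASES = ("page not found", "no longer available")

def looks_like_soft_404(status_code, body_text, min_length=200):
    """Guess whether a successful response is really an error page."""
    if status_code != 200:
        return False  # a real error status is not a *soft* 404
    text = body_text.strip().lower()
    if len(text) < min_length:
        return True  # almost no content on a "successful" page
    return any(phrase in text for phrase in NOT_FOUND_PHRASES)

print(looks_like_soft_404(200, "Sorry, page not found."))
print(looks_like_soft_404(404, "Sorry, page not found."))
```

The first call flags the page, while the second does not, because a genuine 404 status is already the correct response.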

Server error (5xx)

Server errors can be a major pain in the butt, especially when Google cannot access your page because of an issue with your server.

When a server responds with a 5xx status, the page is inaccessible to Googlebot, and nothing you change on the page itself will help because the failure happens on the server before the page is delivered.

In order for Googlebot to access the content on your site again, you will need to get in touch with your hosting provider and work towards resolving the server error(s) causing this problem.

Submitted URL not found (404)

Whenever you see the “not found” type of error, this usually means that Googlebot tried to access a specific location on your website and received a 404 response in return.

One common cause is a URL in your sitemap or in a link that is misspelled or has a misplaced character or word, which sends the crawler to a page that doesn’t exist.

If this is the case, then you have 2 options to fix it: either create a new page that has the right information or edit an existing one with similar content while adding any extra details that might be missing. Beyond this, it’s really all up to what caused the issue to begin with!

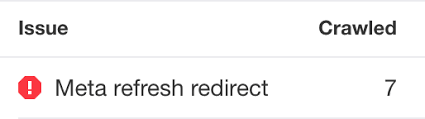

Submitted URL has a META refresh tag

A META refresh tag tells the browser to reload the page or jump to a different URL after a set delay, and Google flags submitted URLs that use one.

When the “META refresh” command is used, users are moved to another page automatically, often after a wait, which makes your site appear much slower than usual and gives them a poor user experience overall.

In order to fix this issue, remove the ‘META refresh’ command from your website, or replace it with a server-side redirect, so Google can successfully crawl and index it as intended without any further interruptions.
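If you are not sure whether a page uses a META refresh, a short script can look for one. This is a minimal sketch using Python’s standard HTML parser. The sample markup and the `MetaRefreshFinder` class are made up for the example.

```python
from html.parser import HTMLParser

class MetaRefreshFinder(HTMLParser):
    """Record every <meta http-equiv="refresh"> tag found in a page."""

    def __init__(self):
        super().__init__()
        self.refreshes = []  # list of (delay, target URL or None)

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag != "meta" or (attrs.get("http-equiv") or "").lower() != "refresh":
            return
        # The content attribute looks like "5; url=https://example.com/new".
        delay, _, rest = (attrs.get("content") or "").partition(";")
        target = rest.strip()
        if target.lower().startswith("url="):
            target = target[4:].strip()
        self.refreshes.append((delay.strip(), target or None))

# Hypothetical page markup for illustration.
finder = MetaRefreshFinder()
finder.feed('<meta http-equiv="refresh" content="5; url=https://example.com/new">')
print(finder.refreshes)
```

An empty list means the page has no META refresh tag.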

Submitted URL contains an infinite-looping redirect

What we typically see here is a site with a misconfigured chain of 301 redirects: each time users land on a page, it redirects them to another location, which eventually redirects back to where they started, so the final page is never reached.

In order for Googlebot to successfully crawl/index your website when this is the case, you will need to remove the looping redirect or change it so the chain ends at a page that loads normally. Switching the redirect from a 301 to a 302 status does not help, because the loop remains.
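A redirect loop is easy to spot once your redirect rules are written down as a simple mapping. The sketch below follows a chain of redirects and reports whether it ever returns to a page it has already visited. The paths and the `follow_redirects` helper are hypothetical; you would fill the mapping with your own server’s redirect rules.

```python
def follow_redirects(start_url, redirects):
    """Follow a redirect map and return (last_url, looped)."""
    seen = [start_url]
    current = start_url
    while current in redirects:
        current = redirects[current]
        if current in seen:
            return current, True  # we came back to a page already visited
        seen.append(current)
    return current, False  # the chain ended at a page with no redirect

# Hypothetical redirect rules: /a and /b point at each other.
redirects = {"/a": "/b", "/b": "/a", "/old": "/new"}

print(follow_redirects("/a", redirects))
print(follow_redirects("/old", redirects))
```

Here `/a` is reported as a loop, while `/old` ends cleanly at `/new`.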

Submitted URL contains a duplicate page

If you want Google’s crawler to properly index your website and separate the pages accordingly, then removing any unnecessary duplication is necessary for this process to go as planned. Duplication can occur when there are multiple versions of the same URL, for example with and without ‘www’ or a trailing slash.

When this happens, you will need to edit your site and remove or consolidate the duplicates, for example by marking one version as the canonical page. Afterwards, you can run a check on the pages within your website via Search Console to find out what might still be causing Google’s crawler to become confused.

Crawling URL not allowed by robots.txt

This error means Googlebot tried to crawl a URL that a rule in your robots.txt file disallows. It has nothing to do with how much traffic the page receives.

It often appears after a broad ‘Disallow’ rule, such as one covering a whole folder, unintentionally blocks pages you wanted indexed.

In order for Googlebot to crawl your website properly again, you will need to tell it which pages it is allowed to access by removing or narrowing the blocking rule in the “robots.txt” file within your root directory/folder.

Google Search Console notifies you with important messages when something is wrong, so it’s always in your best interest to check on this tool whenever you notice any signs of Google indexing errors.

Conclusion

Keep in mind that these are just some of the common errors you might come across with Google Search Console, so if you see any other alerts then be sure to look into them as soon as possible.

At the end of the day, this will save you a lot of time and effort when trying to run a successful SEO campaign for your website which is focused on improving the overall experience your users receive while using it.

If you want to know more about indexing errors and how they can affect you, then I recommend checking out this video by Mike King :
